As I wrote the title of this post, I suddenly realized: there is nothing sexy about the words “generative music.” Just hearing them places you in the land of people in lab coats staring at Matrix-like code and listening to really unmusical, experimental music.

Once you know what generative music is (see the prior post covering its definition), you might feel pangs of anger, confusion, and befuddlement. I mean, what’s so sexy about losing creative control? That is what generative music forces you to grapple with: letting the machines make music for you. In Beat Connection, we’ve explored how to take the reins of your music. Today, let’s ease that grip a bit. Let’s find the ghost in the machine.

Cluster analysis.

– Brian Eno and Peter Schmidt, From Oblique Strategies

As we’ll discover, generative music software is made up of tools we can use to spur our own creativity: maybe to get us out of a rut, maybe as a base from which to build, or maybe to let the thing do its thing and truly surprise us. Trust me, it’s sexy to discover things that are truly out of your control. There is something inherently provocative about understanding the ins and outs (at least superficially) of things that even the people who create the software are struggling to understand.

The generative music software we’ll explore first, on the PC, reexamines how we look at music, taking it apart so that we can mine its interfaces for new ideas. In my opinion, generative music software explains how to construct music to the self-taught musician better than reams of music theory. In a weird way, as music is increasingly piped into our world via algorithms, generative music software may seem more “natural” to anyone entirely new to the music creation process itself.

Let’s flip the logic back to us, the creators. What tools are out there on the personal computer that allow us to “create” generative music? Below, we’ll explore them. In a future post, we’ll look at tools for tablet devices that bring a tactile feel to a seemingly two-dimensional world.

Wotja: Generative Music

Wotja, one of the entryways to generative music, has been with us since the beginning, when translating such ideas to PCs was in its infancy. In the very early ’90s, it was known as KOAN, a bit of software developed by England-based SSEYO that Brian Eno used to create Generative Music 1 with SSEYO Koan Software. In time, KOAN became Noatikl and then, in its current iteration, Wotja.

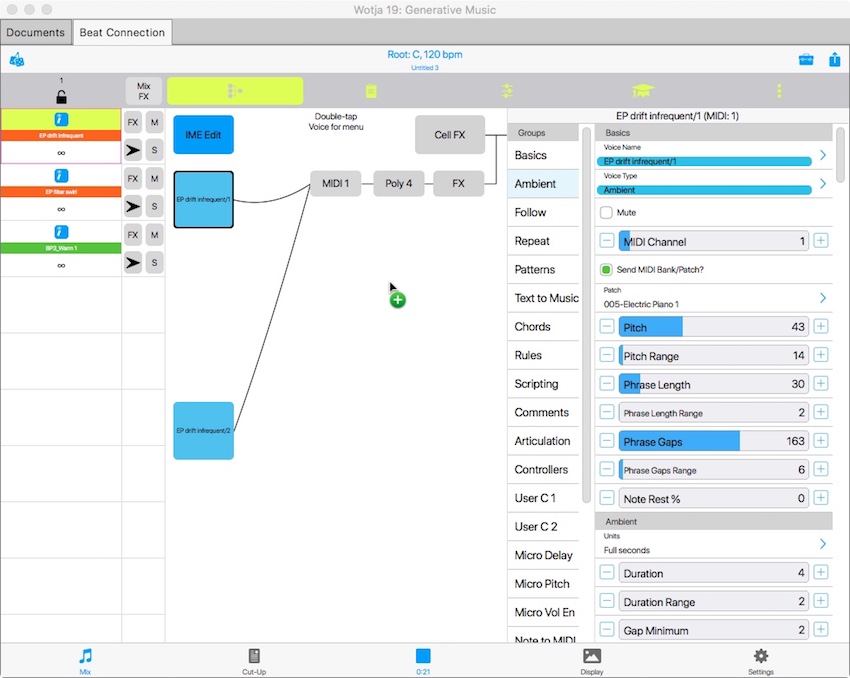

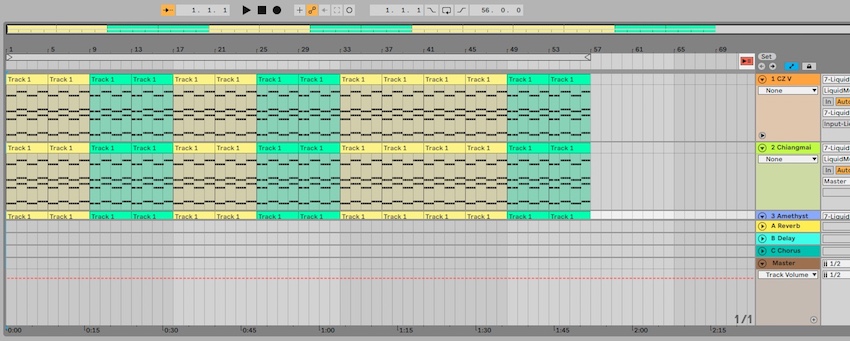

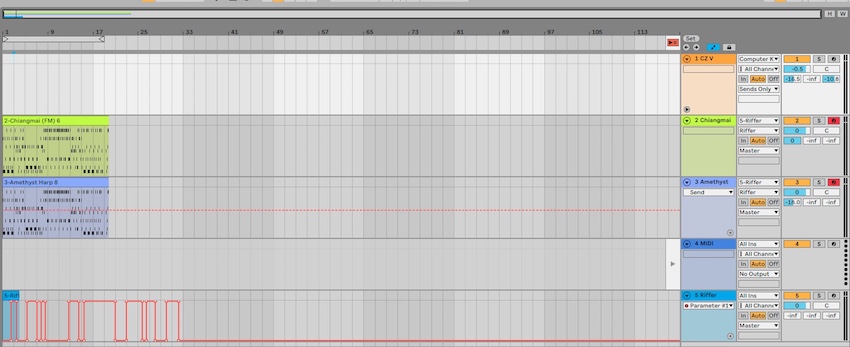

As seen above, Wotja takes apart the granular ideas behind musical terms we know, giving you deep control over what the software will interpret as your musical instructions. Cells, modules, and tracks let you easily create a generative source and pipe out notes that follow musical logic.

A really deep program, Wotja can auto-generate whole performances called “Mixes,” in which its built-in synth engine randomly loads different instruments and uses predetermined templates to generate musical moods such as “swirl,” “drift,” and “warm 1.” Editing an autogenerated mix reveals different types of instrument playback: some tracks follow the melodies of other tracks, others cycle through “fixed patterns,” and still others are designated as “ambient” sources holding notes for prolonged periods.

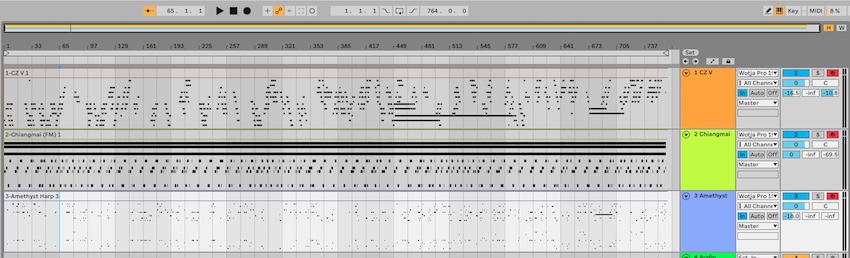

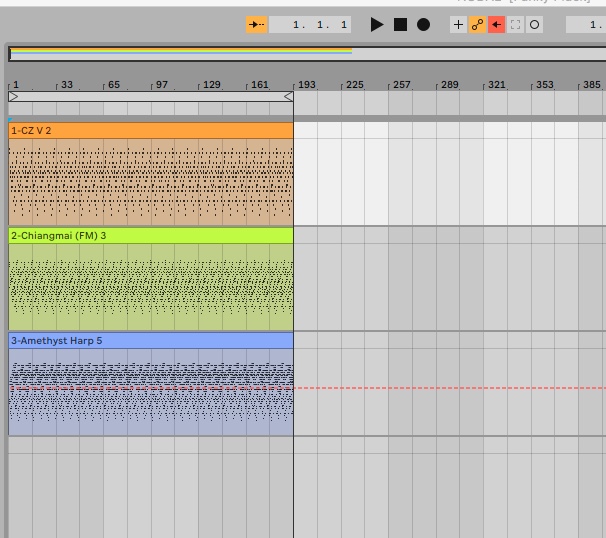

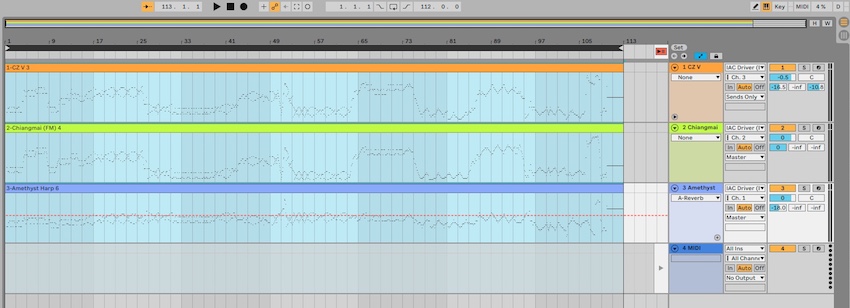

For example, sliders in a group called “Phrase Gap” control the duration, in seconds, between musical phrases. Other sliders, like “Chord Depth,” control the chance that intervals will be added to chords as the harmony changes. In the above sound example, I created a simple three-track mix featuring a pad, a keys sound, and a lead sound. Then, in Ableton Live, I selected patches from Arturia’s CZ-V, Korg Gadget’s Chiangmai, and Ableton Live’s Tension that would sound sympathetic to the melodic unfurling. For 20-some minutes (once Ableton Live was receiving Wotja’s MIDI signals), I let it do its thing and recorded the outcome.
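To make those two slider ideas concrete, here is a minimal Python sketch of how a phrase-gap range and a chord probability could shape generated output. It is not Wotja’s engine: the function `generate`, the `Event` type, the C major note pool, and the parameter names `phrase_gap` and `chord_chance` are all hypothetical stand-ins for what the sliders describe.

```python
import random
from dataclasses import dataclass

# Hypothetical note pool: MIDI note numbers for one octave of C major (C4..B4).
C_MAJOR = [60, 62, 64, 65, 67, 69, 71]


@dataclass
class Event:
    time: float      # onset in seconds
    pitches: list    # one pitch = a single note; three pitches = a triad


def generate(num_phrases, notes_per_phrase, phrase_gap=(1.0, 4.0),
             chord_chance=0.3, note_len=0.5, seed=None):
    """Toy generator: phrases of in-scale notes separated by random gaps.

    phrase_gap   -- (min, max) seconds of silence between phrases (a "Phrase Gap" stand-in)
    chord_chance -- probability that a note becomes a diatonic triad (hypothetical)
    """
    rng = random.Random(seed)
    events, t = [], 0.0
    for _ in range(num_phrases):
        for _ in range(notes_per_phrase):
            degree = rng.randrange(len(C_MAJOR))
            pitches = [C_MAJOR[degree]]
            if rng.random() < chord_chance:
                # Stack the diatonic third and fifth, wrapping up an octave past B.
                for step in (2, 4):
                    d = degree + step
                    pitches.append(C_MAJOR[d % 7] + 12 * (d // 7))
            events.append(Event(t, pitches))
            t += note_len
        t += rng.uniform(*phrase_gap)  # the silence between phrases
    return events
```

With `chord_chance=0.0` every event is a single note; raising it toward 1.0 turns more of them into triads, while widening `phrase_gap` lets the music breathe longer between phrases.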

Wotja works either as standalone software (which can actually generate and mix down whole audio files) or as a MIDI device capable of triggering other instruments in a DAW. By pairing its internal logic with my own curated sounds, I got results from this joint effort that truly surprised me, as heard above.

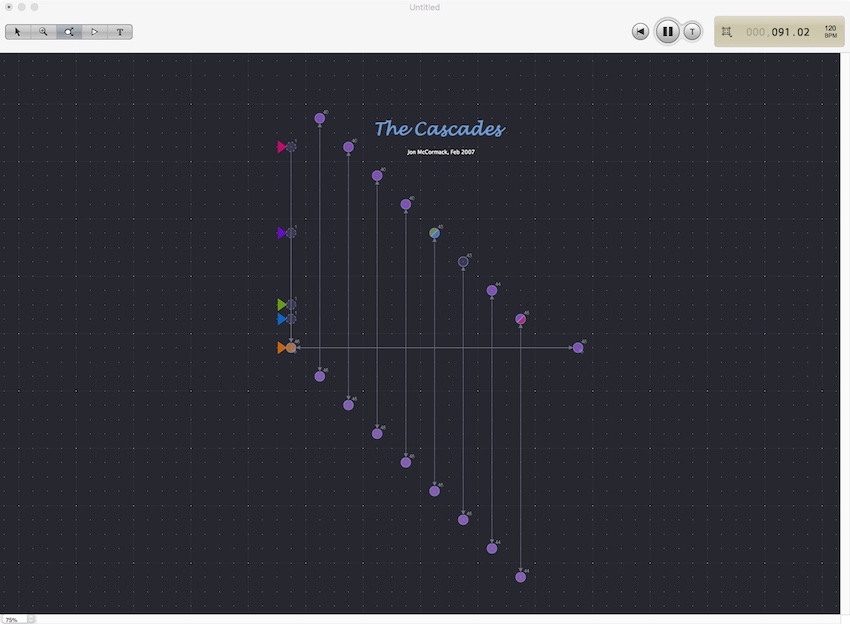

CEMA Research: Nodal Music

When I first fired up CEMA Research’s Nodal Music software, I, truth be told, could not make heads or tails of it. What you’re staring at is a grid system, and on that grid you see something reminiscent of an airline or train transit map: various nodes, visualized and connected. You press play and the whole grid lights up, flashing and beating to the sound of actual music playing. Networks of nodes and the paths that connect them form the basis of Nodal’s processes. It’s fascinating to see but hard to comprehend at first.

After you read the manual, things become much clearer. Nodal Music breaks down musical events (let’s say a one-bar 4/4 loop) into nodes. A four-on-the-floor pattern would, in theory, be a square: four nodes, each joined to the next by a connecting line spanning four grid cells, so that a note is struck on every beat. From there, you can start weaving in different nodes to do different things.

One node can cycle through each note, on each of the four points. You can instruct that node to deviate and double back one beat, or duplicate another node, connect it to this one, and have it affect certain beats. You could even have it say: “cycle within this node, from three different notes, and every time you select a different note, make sure to go into this other vector to play a certain musical cycle of indeterminate end.”
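The node-and-path idea can be modelled in a few lines of Python. This is a toy, not Nodal’s engine: it assumes each node holds a pitch, each outgoing path carries a travel time in beats, and a single “player” walks the network, choosing at random when a node has more than one exit. The names `play`, `pitches`, `edges`, and the example networks are hypothetical.

```python
import random


def play(pitches, edges, start, total_beats, seed=None):
    """Walk a node network and return a list of (beat, pitch) events.

    pitches -- dict: node name -> MIDI pitch sounded when the player arrives
    edges   -- dict: node name -> list of (next_node, beats_to_travel)
    """
    rng = random.Random(seed)
    node, beat, events = start, 0.0, []
    while beat < total_beats:
        events.append((beat, pitches[node]))
        exits = edges.get(node)
        if not exits:
            break                          # dead end: this player stops
        node, length = rng.choice(exits)   # branch at random when there are several exits
        beat += length
    return events


# The "square": four nodes, each path one beat long -> a four-on-the-floor kick (MIDI 36).
square_pitches = {"a": 36, "b": 36, "c": 36, "d": 36}
square_edges = {"a": [("b", 1)], "b": [("c", 1)], "c": [("d", 1)], "d": [("a", 1)]}

# A variant: node "d" may also double back to "c" after half a beat.
branchy_edges = dict(square_edges, d=[("a", 1), ("c", 0.5)])
```

`play(square_pitches, square_edges, "a", 8)` yields a kick on beats 0 through 7; swapping in `branchy_edges` keeps the pulse but occasionally stutters, the kind of deviation described in the paragraph above.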

Nodal is a very visual animal that really opens itself to musical exploration and improvisation. It’s unique as a sequencer (which I imagine is what most people would take it for) but shines as a semi-autonomous improvising instrument, capable of generating compositions that run the gamut from entirely alien to surprisingly musical. In the above sound example, I loaded Nodal’s “The Cascades” example and simply captured a six-minute performance of it, using the same Ableton Live setup as in the previous section.

WaveDNA: Liquid Music

Now we’re moving into territory where the line between generative software and compositional tool blurs. WaveDNA’s Liquid Music counts as generative music software because it can come up with whole melodies, harmonies, and compositions based on musical ideas you set. To put it simply, it’s a MIDI melody and chord designer.

Let’s say you run Liquid Music as a plug-in in your DAW. Fire it up and, with a button press, choose to create something in the key of C major. From there, the software explodes with options.

Do you want to create a certain chord progression, like I-V-vi-vii°? Would you like it to suggest sympathetic chords that could expand on a melodic line? Do you want to turn a certain chord into an arpeggiated line? Can you make this section “happy,” “sad,” and so on? Can you add a Latin, African-based, or other loose groove to this rhythm? From there it goes even deeper.
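For readers new to Roman-numeral notation, this short Python sketch spells out what a progression like I-V-vi-vii° means in C major: each numeral names a scale degree, and the chord is built by stacking every other note of the scale on top of it. This is standard diatonic theory, not Liquid Music’s internal logic; the helper names are hypothetical, and the note table uses sharps only, so flat keys such as Eb are out of scope here.

```python
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]
MAJOR_STEPS = [2, 2, 1, 2, 2, 2, 1]  # whole/half-step pattern of the major scale
ROMAN = {"I": 1, "II": 2, "III": 3, "IV": 4, "V": 5, "VI": 6, "VII": 7}


def major_scale(tonic):
    """Return the seven pitch classes (0-11) of a major scale."""
    idx = NOTE_NAMES.index(tonic)
    scale = []
    for step in MAJOR_STEPS:
        scale.append(idx % 12)
        idx += step
    return scale


def triad(scale, degree):
    """Stack scale degrees 1-3-5 starting from `degree` (1..7)."""
    return [scale[(degree - 1 + k) % 7] for k in (0, 2, 4)]


def quality(chord):
    """Name a triad by its intervals (in semitones) above the root."""
    third = (chord[1] - chord[0]) % 12
    fifth = (chord[2] - chord[0]) % 12
    return {(4, 7): "major", (3, 7): "minor", (3, 6): "diminished"}.get((third, fifth), "other")


def progression(tonic, numerals):
    scale = major_scale(tonic)
    result = []
    for numeral in numerals:
        degree = ROMAN[numeral.rstrip("°").upper()]
        chord = triad(scale, degree)
        result.append(([NOTE_NAMES[pc] for pc in chord], quality(chord)))
    return result
```

`progression("C", ["I", "V", "vi", "vii°"])` returns C-E-G (major), G-B-D (major), A-C-E (minor), and B-D-F (diminished), which is why the last two numerals are written in lowercase.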

Liquid Music lets you begin with an inkling of an idea (sometimes no idea at all) and draw out that sketch into full compositions. Central to it is a painting tool that you can use to paint ascending or descending melodies. Once you do so, you use its built-in tools to carve out what you want that line to do.

If you don’t like how it’s going, you can always let Liquid Music surprise you — there’s a button for that. Once you do like something, you can drag and drop that idea straight into your DAW as a clip or MIDI file.

Beginning with the Key layer preset “Bittersweet in Eb,” I was able to carve out, piece by piece, the bit of Chicago house music with a French filter-house bent that you’re hearing in the example above. The only thing I added was a drum machine (which the groove practically begged for).

Music Mouse – An Intelligent Instrument

Now we’re drilling toward generative systems that function more as “instruments” than as pure sequencers. A very early software-based generative instrument, Music Mouse, developed by American composer Laurie Spiegel in 1986, was originally meant for musicians who had access to a Macintosh, Amiga, or Atari computer. Today, a browser-based version exists that replicates nearly the exact feel of using the original (albeit relying on Google Chrome’s browser-based MIDI output capabilities).

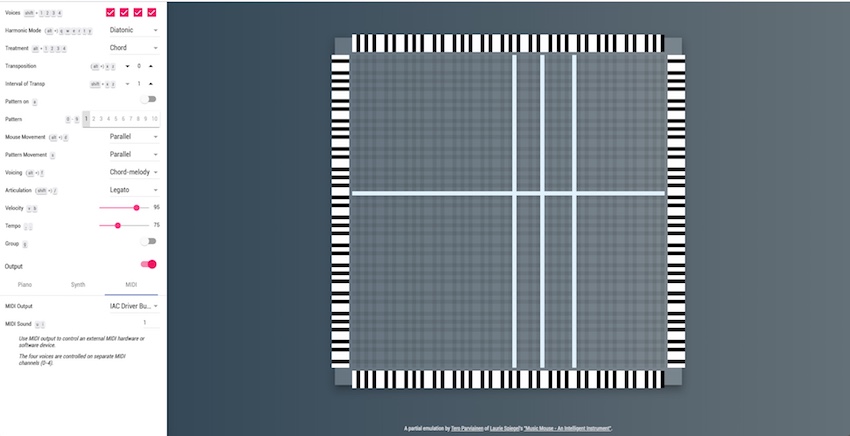

Based on the interaction between a grid and your computer’s mouse, Music Mouse automates the chord and scale knowledge you would otherwise need, tying it to movement. As you move over the grid, the software takes over based on the position of your mouse. Constraining itself to predetermined melodic patterns within a four-by-four border, Music Mouse follows whatever harmonic idea, articulation, and other musical techniques are set on the left side of the screen.
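Here is a minimal Python sketch of the general idea of snapping mouse coordinates to a scale so that no position can produce a “wrong” note. It is only an illustration: Spiegel’s actual mapping, voice count, and harmony modes differ, and the `MODES` table, `snap`, and `mouse_to_voices` are hypothetical.

```python
# Hypothetical harmony modes: semitone offsets within one octave.
MODES = {
    "diatonic": [0, 2, 4, 5, 7, 9, 11],
    "pentatonic": [0, 2, 4, 7, 9],
    "chromatic": list(range(12)),
}


def snap(position, size, mode, low_note=36, octaves=4):
    """Map a 0..size-1 screen coordinate onto a note of the chosen scale."""
    steps = MODES[mode]
    total = len(steps) * octaves                 # playable notes across the range
    index = min(position * total // size, total - 1)
    octave, degree = divmod(index, len(steps))
    return low_note + 12 * octave + steps[degree]


def mouse_to_voices(x, y, width, height, mode="diatonic"):
    """x drives one voice; y (inverted so moving up means higher) drives another."""
    return snap(x, width, mode), snap(height - 1 - y, height, mode)
```

Switching `mode` from `"diatonic"` to `"pentatonic"` loosely mirrors changing the harmony setting: the same hand movement now lands only on pentatonic notes.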

The beauty of Music Mouse is that you don’t have to know a lick of music or technique to make something musical. It relies on the user (in this case, the mouse mover) to listen deeply and use keyboard shortcuts to instantly change voicing, articulation, harmonic scales, and so on: all the things that make music, without having to stop, pick up a manual, and sift through hundreds of settings (or theory) to get there.

Music Mouse features a unique mode in which you simply leave the mouse on a part of the grid whose sequence you particularly like, and it takes over. From there, hitting ‘a’ activates pattern mode, allowing you to arrange its not-so-random patterns in a musical way. It’s hard to convey how brilliant this is until you actually try it.

Move the mouse, and you’re playing the instrument. Leave the mouse, and the instrument “follows” your train of thought and takes over. The song in the example above was an impromptu jam I created using the same three instruments as before.

Audiomodern: Riffer

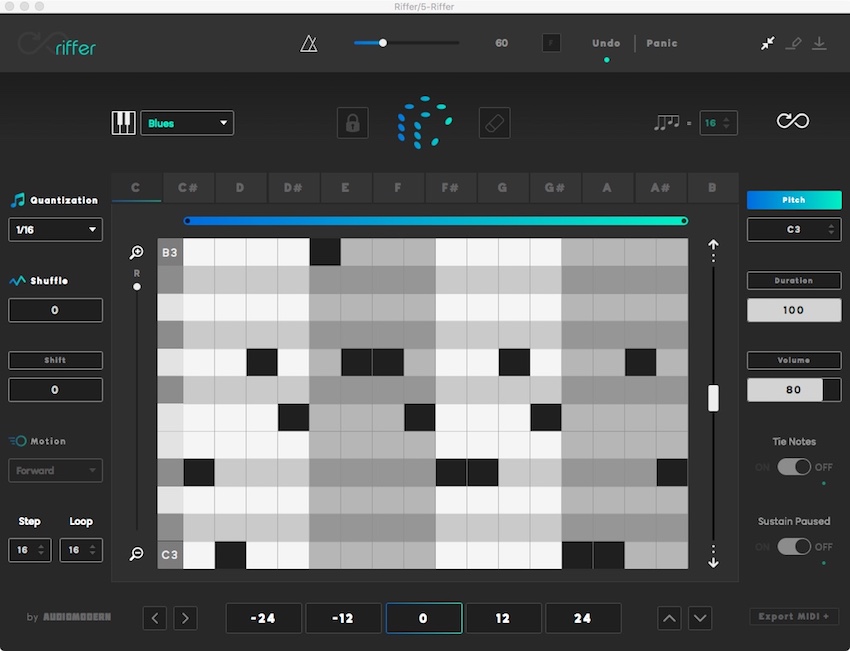

Audiomodern’s Riffer sits midway between Liquid Music and Music Mouse. Far more spartan in nature, Riffer, as its name implies, creates riffs for you. The app exists in both the PC and tablet realms and evolved from an earlier Max for Live plug-in called Random Riff Generator PRO 2. Starting from riffs based in a certain key or scale, you can then randomize each note’s duration, volume, and playback. Riffing on a riff, in a way.

Better suited to melodic patterns than to chordal harmonies, Riffer is about as straightforward an introduction to “generative” musical ideas as you can find. Hit the dice, and notes within the selected scale are randomly placed on the piano roll. From there, you can adjust velocities, add some shuffle, and change the quantization of that pattern.

Riffer is great if you just want quick melodies that you can modify more to your liking. You’re able to tie notes together and sustain sections, much like you would in any other sequencer.

What makes it a truly generative monster is the ability to hit that infinity symbol and let Riffer continuously generate random, in-scale melodies for you. Once you hear something that sounds just right, you can hit the lock icon and switch off the infinity button. Voilà! You’ve just “created” a new melody you can easily import into your DAW and use as a loop or send off to MIDI land.
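The dice, infinity, and lock workflow can be sketched as a small Python class. This is an assumption-laden toy, not Audiomodern’s code: `roll_dice`, `RiffMachine`, the 16-step grid, the `density` parameter, and the velocity range are all hypothetical.

```python
import random

# Scale intervals in semitones above the root.
SCALES = {"major": [0, 2, 4, 5, 7, 9, 11], "minor": [0, 2, 3, 5, 7, 8, 10]}


def roll_dice(rng, root=60, scale="minor", steps=16, density=0.6):
    """One "dice roll": random in-scale notes on a step grid, as (step, pitch, velocity)."""
    riff = []
    for step in range(steps):
        if rng.random() < density:  # leave some steps empty
            pitch = root + rng.choice(SCALES[scale]) + 12 * rng.choice([0, 1])
            riff.append((step, pitch, rng.randint(60, 127)))
    return riff


class RiffMachine:
    """Infinity mode rerolls on every loop; locking freezes the current riff."""

    def __init__(self, seed=None, **settings):
        self.rng = random.Random(seed)
        self.settings = settings
        self.locked = False
        self.riff = roll_dice(self.rng, **settings)

    def next_loop(self):
        if not self.locked:
            self.riff = roll_dice(self.rng, **self.settings)
        return self.riff
```

Call `next_loop()` once per bar while listening; when a riff lands, set `locked = True`, and every later call returns that same riff, ready to export.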

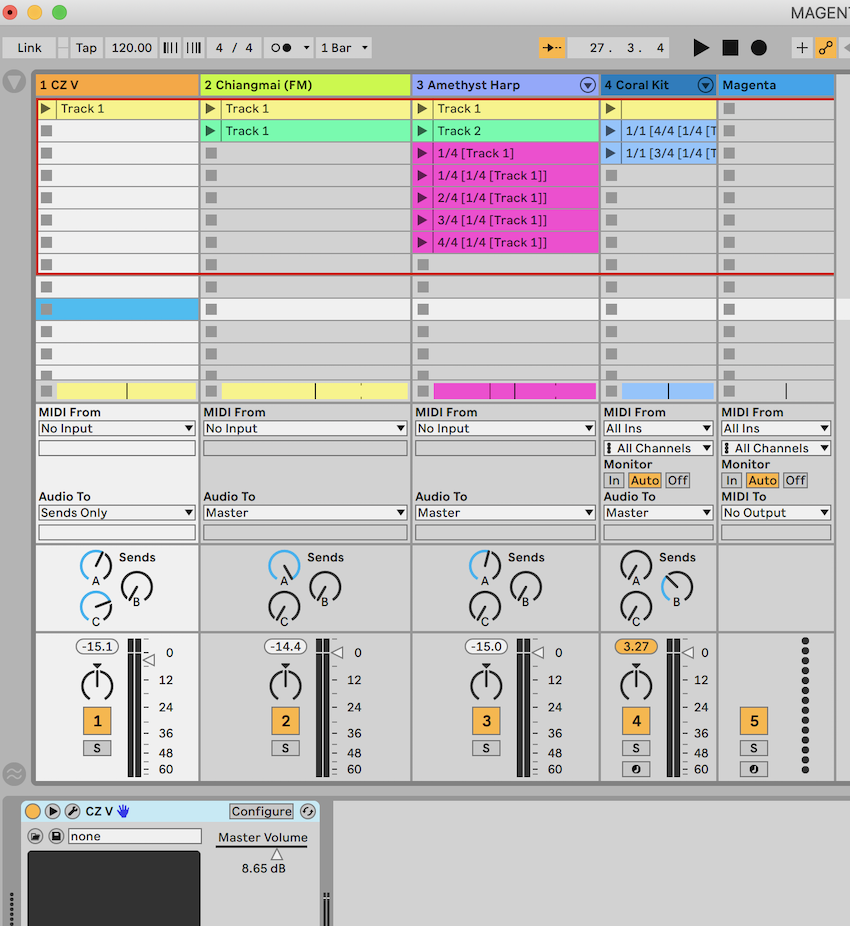

Google AI: Magenta Studio

The final bit of PC software I’ll introduce you to is Magenta Studio, made by a small tech startup some of you might know of: Google. Magenta reached a wide audience via a Google Doodle that introduced the world to both the musical ideas of J.S. Bach and machine learning. Of course, Johann, or Mr. Bach, needs no introduction, but machine learning might.

Machine learning is a developing technology. In Magenta Studio’s case, the software is built on models trained on large amounts of musical data; fed new melodic information, it generates musical ideas based on what it has learned. Ideally, it observes and learns patterns from that data (chords, grooves, etc.) and then extrapolates from them to do what it’s being asked to do.

For a lay musician like myself, one not remotely adept at anything in software-coding land, I see it as a tool to explore alternate melodies or to generate drum patterns loosely based on an existing melody.

Neural networks, the software logic that has revolutionized AI (artificial intelligence), do the behind-the-scenes work in Magenta Studio and, in theory, could take these ideas further in the future. Simple randomization relies on chance, but this kind of generative software attempts to behave more like a sentient creature would. Having some inkling of that nascent, logical process (in music software form) makes it an interesting thing to try to comprehend and use.

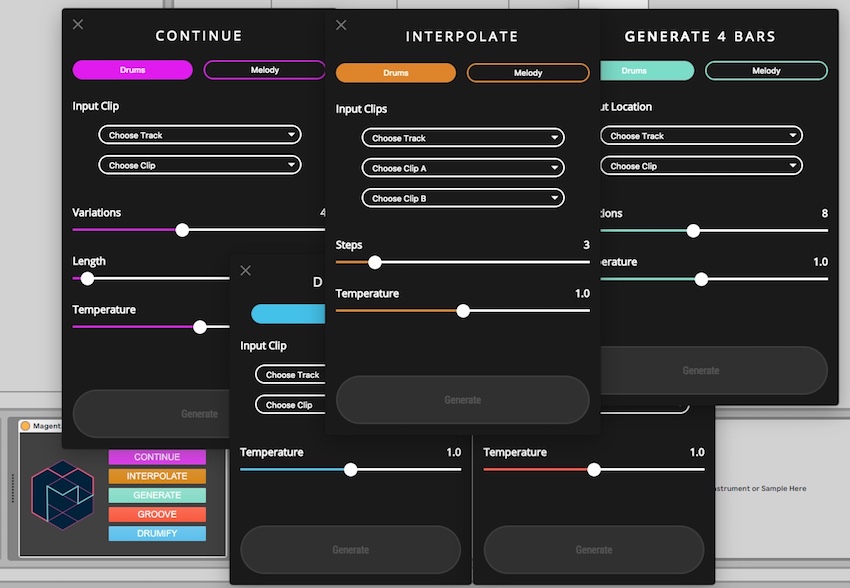

In Magenta Studio’s case, this kind of machine learning is asked to do a few things: continue a melodic idea, take two ideas and blend them into one new idea, generate melodic or drum patterns from scratch, adjust the feel of an existing drum pattern, or create a drum pattern out of a melodic note pattern. In a nutshell, that’s what the modules within Magenta Studio (Continue, Interpolate, Generate, Groove, and Drumify) do, respectively.

At the moment, the generated results aren’t entirely musical, and you’ll need to curate the best of what Magenta’s tools produce. With its aid, I did my best to “remix/rethink” the music created in the WaveDNA: Liquid Music section.

Still, you can understand what it’s attempting to do, even if you expected more. Only time will tell if our fears of machine-generated music will ever pan out. In the next post, we’ll look at tablet apps that use touch and other forms of generation to “create” music.

Images by author (with generative assist from Michael Bromley’s “Chromata”), music by MacBook Pro (with human assist by author).
