I’m going to ask you to do something today: spare a thought for the old keyboard. Whether at school, in the living room or at a music store, everyone at some point in their life has plopped themselves in front of one and struck a note.

Intuitively, we know how they work. On an acoustic piano you hit a key and, behind the scenes, a mechanism throws a hammer against a string, vibrating it and producing a sound. On a digital instrument, that key press triggers a digitally generated sound. That black or white key has only one job: trigger something.

This is a fact of life, or so we thought. MPE (MIDI Polyphonic Expression) is a revolutionary new bit of technology that totally upends what we thought was written in stone. Today we’re going to look at what it is and how it is reimagining what a keyboard can do.

A Journey To The Old MIDI Standard

In 1983, MIDI itself was introduced as a digital standard to unify how electronic instruments could interface with each other and with computers. Before MIDI existed, musicians had to navigate treacherous waters trying to figure out how to make one proprietary control jack talk to another proprietary connection.

In the early ’80s, a group of musical instrument manufacturers, including Sequential Circuits, Roland, and others, worked with dedicated hardware engineers to put into writing a standard that would dictate what messages all new electronic instruments going forward could use. The MIDI Manufacturers Association (MMA) would go on to maintain it. Gone were the days of tearing your hair out trying to get such instruments to work together.

The first MIDI iteration we all know and love (version 1.0) would introduce concepts like MIDI channels, Note On/Off events, Velocity, Aftertouch (pressure), Pitch Bend, SysEx, and MIDI Time Code, to name a few. Now one keyboard could be used to control and interface with various synthesizers and computers using this shared language simply by using a MIDI cable.
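
To make that shared language a little more concrete, here is a minimal sketch of how a few of those messages look as raw bytes on the wire. The function names are my own, purely for illustration, and channels are numbered 0 to 15 in code (channel 1 on a front panel is 0 here).

```python
def note_on(channel: int, note: int, velocity: int) -> bytes:
    """Note On: status byte 0x90 plus channel, then note number and velocity."""
    return bytes([0x90 | (channel & 0x0F), note & 0x7F, velocity & 0x7F])


def note_off(channel: int, note: int, velocity: int = 0) -> bytes:
    """Note Off: status byte 0x80 plus channel, then note number and release velocity."""
    return bytes([0x80 | (channel & 0x0F), note & 0x7F, velocity & 0x7F])


def pitch_bend(channel: int, value: int) -> bytes:
    """Pitch Bend: 14-bit value (0..16383, centre 8192) sent low 7 bits first."""
    value = max(0, min(16383, value))
    return bytes([0xE0 | (channel & 0x0F), value & 0x7F, (value >> 7) & 0x7F])


# Middle C (note 60) at velocity 100 on the first channel.
print(note_on(0, 60, 100).hex(" "))   # 90 3c 64
# A centred pitch wheel on the first channel.
print(pitch_bend(0, 8192).hex(" "))   # e0 00 40
```

Notice that the pitch bend message carries a channel but no note number. Keep that detail in mind; it matters later.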

As revolutionary as MIDI was, so were the little things it did behind the scenes. Certain vocabulary in its framework (Aftertouch, Pitch Bend, and Velocity) codified and expanded what electronic instruments could do, setting in motion a new way for musicians to interface with sound.

In the past, a key press had a certain journey. Depending on how hard you struck a key and where it sat on the keyboard, you could affect the volume and the pitch of whatever sound came out of the instrument it was attached to. Going beyond what acoustic pianos offered, analog pre-MIDI-spec instruments tried to add further expression into the mix.

If you’ve played a guitar or a wind instrument you know that placing your finger on a fret or a hole isn’t the end of that journey. On a guitar you can pull, pluck, wiggle, and slap a string and know it will affect the sound in some way. Wind instruments use your breath to affect the instrument’s pitch, volume, and sonority.

When electronic synth makers started to introduce separate controls for pitch bend and modulation, and to alter keyboard mechanisms to include sensors that pick up pressure, it was their attempt to introduce expression into a mechanical interface that never had it. Others, like Don Buchla, imagined new gesture-based interfaces, trying to move beyond all known conventions.

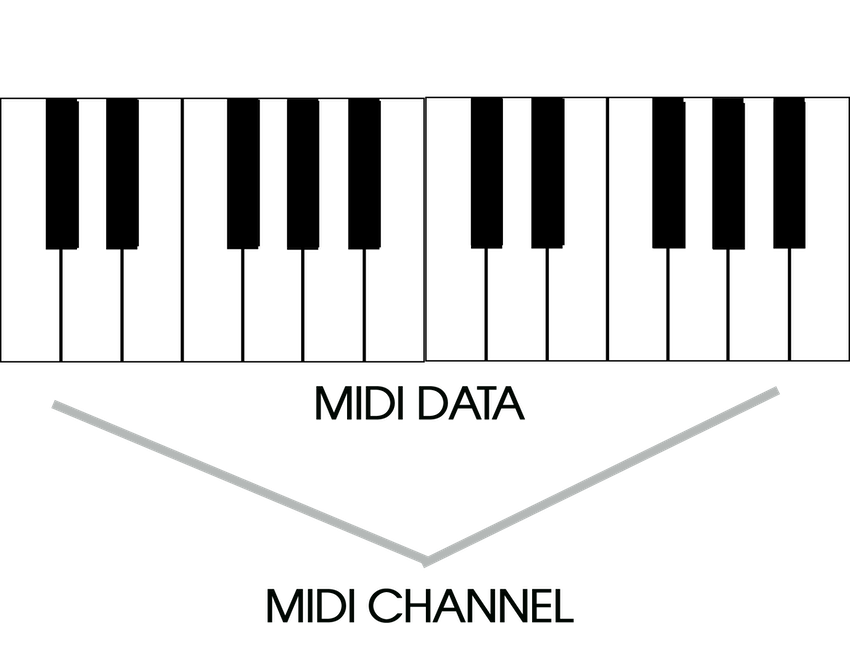

Initially, MIDI 1.0 tried to address these new technological advancements. Those new controls could now affect all synthesizers that spoke the spec. Through one MIDI channel you could transmit all that info to any other device listening in.

With the introduction of guitar MIDI controllers there came a conundrum: How do you apply monophonic controls to a polyphonic instrument? At that moment in time, all that keyboard technology allowed was for Pitch Bend, Modulation, and Legato (to name a few) to be applied equally to all notes being played from the instrument.

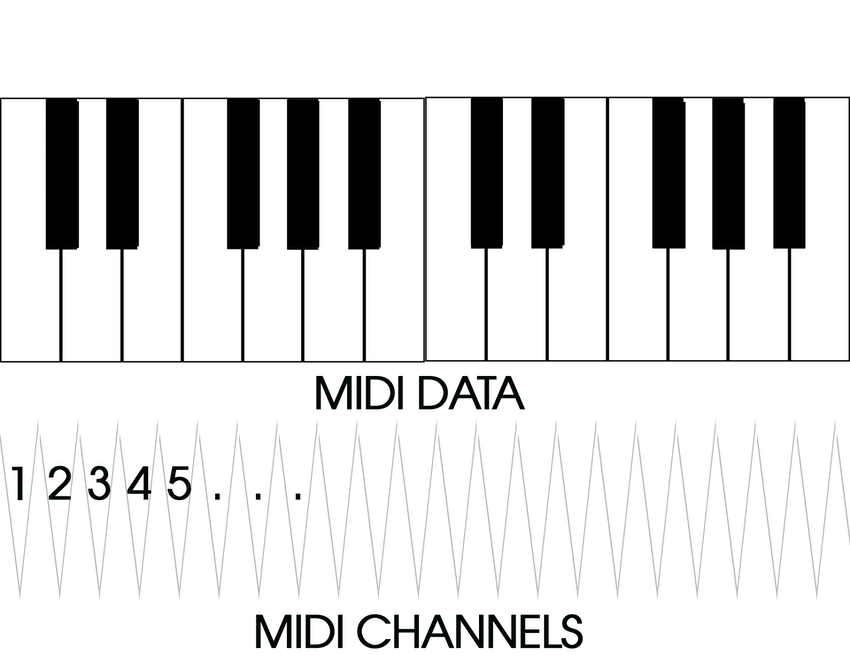

Guitars are/were different beasts. On each string of a guitar you can apply different expressive techniques. One string can be playing a vibrato while another might be ringing out openly. The MIDI spec had to find some flexibility to allow guitar MIDI controllers to work differently, to treat each string being picked up separately.

Thankfully, someone wrote into the spec “Polyphonic Key Pressure” and “Channel Mode Messages,” allowing any guitar MIDI controller maker to give each string its own MIDI channel that can transmit its own expression, affecting solely that string. It is in that bit of spec where the foundations of something new would take hold.

The Rise Of MIDI Polyphonic Expression

As electronic instruments have evolved, cracks have appeared, exposing some of the limitations of the original spec. Instruments like Roger Linn’s LinnStrument, Haken Audio’s Continuum, ROLI’s Seaboard, KMI’s K-Board, and the Eigenharp have introduced technological advancements to ye olde keyboard instruments, giving them the ability to sense more than just velocity and pressure. These instruments now look vastly different too.

Faces Still Melted – Marco Parisi Plays Purple Rain on ROLI’s Rise

Going beyond the familiar controls, these instruments sense lateral movements (wiggling a key being one example) and can sense and transmit expression per note. They needed a name (they were dubbed multidimensional instruments) and an updated standard, MPE (MIDI Polyphonic Expression), that could understand what they were trying to capture.

This new standard was proposed in 2017 and adopted shortly thereafter, codifying into the existing MIDI spec exactly how newly built synths, software, and other interfaces can comprehend this language. Originally, MIDI CC (Control Change) and Pitch Bend messages applied to all notes coming through a MIDI channel. Now MPE devices would assign a channel to each note played. MPE allowed each note to have its own controls without influencing the others.
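
Here is a toy model of that difference, written as a hedged sketch rather than anything resembling a real synth engine. `ToySynth` is a hypothetical class of my own; the only thing it borrows from MIDI is the rule that pitch bend is stored per channel, not per note.

```python
from collections import defaultdict


class ToySynth:
    """Toy receiver: pitch bend belongs to a channel, never to a single note."""

    def __init__(self):
        self.notes = defaultdict(set)    # channel -> note numbers currently sounding
        self.bend = defaultdict(float)   # channel -> current bend, in semitones

    def note_on(self, channel, note):
        self.notes[channel].add(note)

    def pitch_bend(self, channel, semitones):
        self.bend[channel] = semitones

    def sounding_pitches(self):
        """Every sounding note, shifted by the bend of the channel it lives on."""
        return sorted(
            note + self.bend[channel]
            for channel, notes in self.notes.items()
            for note in notes
        )


# Conventional setup: a C major triad all on one channel, then a one-semitone bend.
old = ToySynth()
for note in (60, 64, 67):
    old.note_on(0, note)
old.pitch_bend(0, 1.0)
print(old.sounding_pitches())   # [61.0, 65.0, 68.0] -- the whole chord moves

# Channel-per-note setup: same triad, one note per channel, bend only the top note.
mpe = ToySynth()
for channel, note in zip((1, 2, 3), (60, 64, 67)):
    mpe.note_on(channel, note)
mpe.pitch_bend(3, 1.0)
print(mpe.sounding_pitches())   # [60.0, 64.0, 68.0] -- only the G moves
```

The receiver did not change at all between the two runs. The only difference is how the sender spread its notes across channels.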

How MPE Works

MPE works by designating one channel as the global (master) channel of an MPE Zone. This channel sends out zone-wide messages like “sustain” and others still driven by existing monophonic controls. Since the existing MIDI spec only goes up to 16 channels, the remaining 15 channels are used to transmit note (on/off) and expression (velocity, pressure/aftertouch, pitch bend, and brightness) messages, one sounding note per channel. To account for the increased sensitivity to lateral and vertical movements, such as sliding along a key as you would on a ribbon controller, each note’s channel carries its own pitch bend, pressure, and control change messages.
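
For the curious, the sending side of that scheme can be sketched in a few lines. This is a simplified illustration under stated assumptions, not how any particular controller implements it: `MpeLowerZone` and its method names are hypothetical, the zone uses channel 1 as its global channel with channels 2 to 16 for notes, free channels are handed out in simple first-come order, brightness is sent as CC 74, and the configuration message a real device sends to announce its zone is left out.

```python
class MpeLowerZone:
    """Sketch of an MPE sender: one global channel, one member channel per note."""

    MASTER = 0  # MIDI channel 1, zero-based

    def __init__(self, member_channels: int = 15):
        self.free = list(range(1, 1 + member_channels))  # channels 2..16, zero-based
        self.active = {}                                  # note number -> its channel

    def note_on(self, note: int, velocity: int) -> bytes:
        if not self.free:
            raise RuntimeError("no free member channel (more notes than channels)")
        channel = self.free.pop(0)
        self.active[note] = channel
        return bytes([0x90 | channel, note, velocity])

    def note_off(self, note: int) -> bytes:
        channel = self.active.pop(note)
        self.free.append(channel)            # the channel can be reused by a later note
        return bytes([0x80 | channel, note, 0])

    def bend(self, note: int, value14: int) -> bytes:
        """Per-note pitch bend: 14-bit value, 8192 is centre."""
        channel = self.active[note]
        return bytes([0xE0 | channel, value14 & 0x7F, (value14 >> 7) & 0x7F])

    def pressure(self, note: int, value: int) -> bytes:
        """Per-note pressure, sent as Channel Pressure on the note's own channel."""
        return bytes([0xD0 | self.active[note], value])

    def brightness(self, note: int, value: int) -> bytes:
        """Per-note brightness, sent as CC 74 on the note's own channel."""
        return bytes([0xB0 | self.active[note], 74, value])

    def sustain(self, down: bool) -> bytes:
        """Zone-wide sustain pedal (CC 64) goes out on the global channel."""
        return bytes([0xB0 | self.MASTER, 64, 127 if down else 0])


zone = MpeLowerZone()
print(zone.note_on(60, 100).hex(" "))    # 91 3c 64 -> first note lands on channel 2
print(zone.note_on(64, 90).hex(" "))     # 92 40 5a -> second note lands on channel 3
print(zone.bend(64, 8192 + 1000).hex(" "))  # e2 68 47 -> bends only the second note
print(zone.sustain(True).hex(" "))       # b0 40 7f -> pedal on the global channel
```

Every message here is plain MIDI 1.0. What MPE adds is the agreement about which channel each message goes on.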

In this wild new world of futuristic MIDI control, new electronic instruments are getting closer to (if not surpassing) the bevy of expression found in acoustic instruments. DAWs like Apple’s Logic Pro, Bitwig Studio, and Cubase support it. So do synthesizers like Ashun Sound Machine’s Hydrasynth, Modal’s Argon series, Madrona Labs’ Aalto, and the freeware softsynth Surge. Meanwhile, a whole slew of new hardware and software is being created as we speak to take advantage of this new expression.

But enough of my yapping, let’s take a look at some examples of how it sounds in practice.

MPE Sound Examples

What you’re hearing above is a one-take improvisation using a sawtooth-based pad from ASM’s Hydrasynth. I played with two hands, applying varying amounts of pressure, while holding two different chords on a ROLI Seaboard Rise. Here you can really appreciate the volume and dynamic swell that’s only possible with the MPE standard.

My dream as a keyboard player is sometimes to play string instruments that are nearly impossible (if not impossible) to recreate via any VST, synth, plug-in, or sample library. In this ASM Hydrasynth improv based on a patch dubbed “VHS Dreams,” I was able to modify its electric piano beginnings into something that more closely approximates the expression and tonal qualities of a pedal steel guitar.

Then using the ROLI Seaboard Rise and MPE, I could mimic the slide and vibrato effects necessary to isolate single notes to play leads that don’t affect the chordal backing. MPE offers you a great starting point to get that much closer to playing instruments like these in a more authentic way.
